Label attention is a kind of attention mechanism in deep learning that integrates label features into the attention computation. In this tutorial, we will use the paper A Label Attention Model for ICD Coding from Clinical Text to introduce it.

What is label attention?

We usually compute attention scores as follows:

\[u=\tanh(hw+b)V\]

Here \(h\) is our input, for example:

\(h\) is a matrix with shape 50*200

\(w\) is a learnable weight matrix with shape 200*200

\(V\) is a learnable weight matrix with shape 200*1

It means we will get an output \(u\) with shape 50*1. We can then apply a softmax() function to \(u\) to get the attention scores.
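The shapes above can be checked with a minimal NumPy sketch. It reads the product of \(h\) and \(w\) as a matrix product, since that is what the shapes 50*200 and 200*200 allow. The random values, the zero bias `b`, the choice to apply softmax over the 50 positions, and the final weighted sum `context` are illustrative assumptions, not details taken from the paper.

```python
import numpy as np

def softmax(x, axis=0):
    # Subtract the max for numerical stability before exponentiating.
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

rng = np.random.default_rng(0)
h = rng.standard_normal((50, 200))          # input: 50 positions, 200 features
w = rng.standard_normal((200, 200)) * 0.05  # learnable weight (random stand-in)
b = np.zeros(200)                           # bias (assumed shape: 200)
V = rng.standard_normal((200, 1)) * 0.05    # learnable weight (random stand-in)

u = np.tanh(h @ w + b) @ V   # shape (50, 1): one score per position
a = softmax(u, axis=0)       # shape (50, 1): attention scores summing to 1
context = a.T @ h            # shape (1, 200): attention-weighted sum of h
```

The result is a single 1*200 vector, regardless of how many labels the task has.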

However, the attention mechanism above does not contain any label information, which is a limitation if you plan to use attention in a classification or labeling problem.

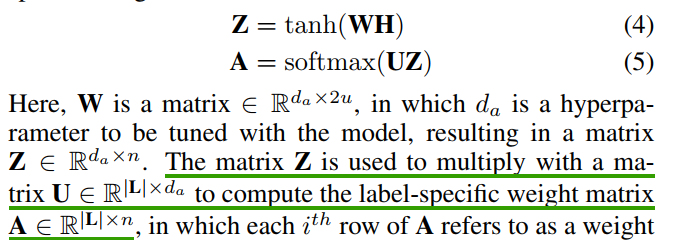

The paper A Label Attention Model for ICD Coding from Clinical Text adds a label representation to the attention.

For example, suppose we use attention in a classification task where the number of classes (labels) is 10.

Here we create a label representation \(V\) with shape 200*10.

In the conventional attention mechanism, by comparison, \(V\) is 200*1, not 200*10.
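To make the difference concrete, here is a minimal NumPy sketch in which the only change from conventional attention is the shape of \(V\). The random values, the zero bias, and the softmax over the 50 positions are assumptions for illustration. Each column of `V` plays the role of one label's representation.

```python
import numpy as np

def softmax(x, axis=0):
    # Subtract the max for numerical stability before exponentiating.
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

rng = np.random.default_rng(0)
n_positions, dim, n_labels = 50, 200, 10

h = rng.standard_normal((n_positions, dim))   # input, 50*200
w = rng.standard_normal((dim, dim)) * 0.05    # learnable weight, 200*200
b = np.zeros(dim)                             # bias (assumed shape: 200)
V = rng.standard_normal((dim, n_labels)) * 0.05  # label representation, 200*10

u = np.tanh(h @ w + b) @ V   # shape (50, 10): one score per position per label
a = softmax(u, axis=0)       # each column sums to 1 over the 50 positions
label_vectors = a.T @ h      # shape (10, 200): one 200-dim vector per label
```

Each label now attends to the input with its own set of weights, so the layer produces 10 vectors instead of 1.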

How to use label attention?

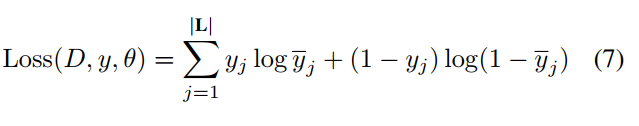

In this paper, if the model input is 50*200, the attention layer outputs a 10*200 matrix (one 200-dimensional vector per label), not 1*200. We can then sum the loss values over all labels to train the model, as the paper does.

Here \(L\) is the number of labels.
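As a rough sketch of what summing the loss over labels looks like, the snippet below scores each label vector with its own weights and adds up one binary cross-entropy term per label. The binary cross-entropy form, the per-label output weights `W_out` and `b_out`, and the random targets `y` are all assumptions made for illustration; check the paper for the exact formulation.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

rng = np.random.default_rng(0)
n_labels, dim = 10, 200

# Stand-in for the 10*200 output of the label attention layer.
label_vectors = rng.standard_normal((n_labels, dim))

# Hypothetical per-label output weights and biases (not from the source).
W_out = rng.standard_normal((n_labels, dim)) * 0.05
b_out = np.zeros(n_labels)

# Random 0/1 targets, one per label (multi-label setting).
y = rng.integers(0, 2, size=n_labels).astype(float)

# One logit per label: dot product of each label vector with its own weights.
logits = (W_out * label_vectors).sum(axis=1) + b_out   # shape (10,)
p = sigmoid(logits)

eps = 1e-12  # avoids log(0)
per_label_loss = -(y * np.log(p + eps) + (1 - y) * np.log(1 - p + eps))
loss = per_label_loss.sum()   # total loss: sum over the L = 10 labels
```

Because every label contributes one term, the total grows with the number of labels, which is relevant to the concerns raised next.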

However, this loss function is not complete, because:

(1) How does \(U\) reflect the differences between labels?

(2) The label attention proposed in this paper cannot be used directly in a deep model.

For example, if a deep model uses 5 attention layers, how should this label attention method be applied in all 5 layers?

Most importantly, summing the loss values over all labels may have a negative impact on final accuracy, and the result may be much worse than with the conventional attention mechanism. This suggests that the label attention proposed in this paper is not a good method, and that its loss function in particular is a poor choice.