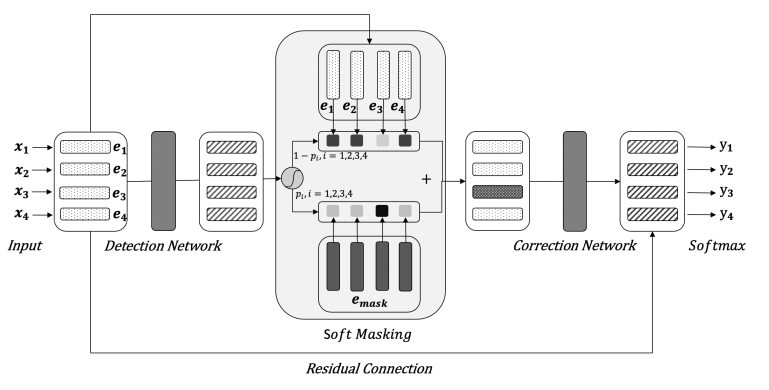

In NLP, we do not mask any input embeddings when using BERT for text classification tasks. However, the paper Spelling Error Correction with Soft-Masked BERT proposes a masking method.

Soft-Masked BERT

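BERT does not have sufficient capability to detect whether there is an error at each position. The paper therefore uses the [MASK] embedding to represent possibly erroneous words: each input embedding is partially ("softly") replaced by the [MASK] embedding, in proportion to the predicted probability that the word at that position is an error.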

How to get soft-masked inputs for BERT

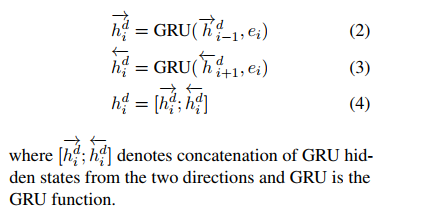

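Step 1: Use a bidirectional GRU (Bi-GRU) to encode the input and predict, at each position, the probability that the word is an error.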
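A minimal pure-Python sketch of this detection step follows, assuming toy 3-dimensional input embeddings and randomly initialised, untrained weights, so the printed probabilities are meaningless until the network is trained. A forward GRU and a backward GRU encode the sequence, their hidden states are concatenated, and a linear layer followed by a sigmoid gives p_i for each position. The names `GRUCell` and `detect_error_probs` are hypothetical, and biases are omitted from the GRU to keep it short.

```python
import math
import random


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def matvec(W, x):
    # Multiply matrix W (a list of rows) by vector x.
    return [sum(w * v for w, v in zip(row, x)) for row in W]


def rand_matrix(rows, cols, rng):
    # Untrained, randomly initialised weights (illustration only).
    return [[rng.uniform(-0.5, 0.5) for _ in range(cols)] for _ in range(rows)]


class GRUCell:
    """Minimal GRU cell; biases are omitted to keep the sketch short."""

    def __init__(self, input_dim, hidden_dim, rng):
        self.hidden_dim = hidden_dim
        # Weights for the update gate (z), reset gate (r) and candidate state (h).
        self.Wz, self.Uz = rand_matrix(hidden_dim, input_dim, rng), rand_matrix(hidden_dim, hidden_dim, rng)
        self.Wr, self.Ur = rand_matrix(hidden_dim, input_dim, rng), rand_matrix(hidden_dim, hidden_dim, rng)
        self.Wh, self.Uh = rand_matrix(hidden_dim, input_dim, rng), rand_matrix(hidden_dim, hidden_dim, rng)

    def step(self, x, h):
        z = [sigmoid(a + b) for a, b in zip(matvec(self.Wz, x), matvec(self.Uz, h))]
        r = [sigmoid(a + b) for a, b in zip(matvec(self.Wr, x), matvec(self.Ur, h))]
        rh = [ri * hi for ri, hi in zip(r, h)]
        h_cand = [math.tanh(a + b) for a, b in zip(matvec(self.Wh, x), matvec(self.Uh, rh))]
        # Interpolate between the previous state and the candidate state.
        return [(1 - zi) * hi + zi * ci for zi, hi, ci in zip(z, h, h_cand)]

    def run(self, xs):
        h = [0.0] * self.hidden_dim
        states = []
        for x in xs:
            h = self.step(x, h)
            states.append(h)
        return states


def detect_error_probs(embeddings, hidden_dim=4, seed=0):
    """Return p_i, the probability that position i is an error, for each embedding."""
    rng = random.Random(seed)
    input_dim = len(embeddings[0])
    forward = GRUCell(input_dim, hidden_dim, rng)
    backward = GRUCell(input_dim, hidden_dim, rng)
    h_fwd = forward.run(embeddings)
    h_bwd = backward.run(embeddings[::-1])[::-1]  # re-align with the original order
    w = [rng.uniform(-0.5, 0.5) for _ in range(2 * hidden_dim)]
    b = 0.0
    probs = []
    for hf, hb in zip(h_fwd, h_bwd):
        h = hf + hb  # list concatenation = [forward state; backward state]
        probs.append(sigmoid(sum(wi * hi for wi, hi in zip(w, h)) + b))
    return probs


# Five toy positions with 3-dimensional embeddings.
data_rng = random.Random(42)
embeddings = [[data_rng.uniform(-1, 1) for _ in range(3)] for _ in range(5)]
probs = detect_error_probs(embeddings)
print([round(p, 3) for p in probs])
```

The bidirectional encoding lets each p_i depend on context on both sides of the word, which matters for spotting errors that only look wrong given the following words.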

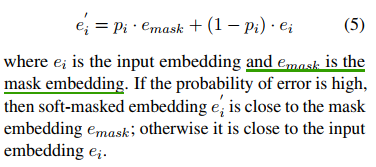

Step 2: Compute the soft-masked input embeddings.
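In the paper, the soft-masked embedding at position i is a weighted sum of the [MASK] embedding and the original input embedding: e'_i = p_i * e_mask + (1 - p_i) * e_i, where p_i is the error probability from Step 1. When p_i is close to 1 the input behaves like [MASK]; when it is close to 0 the input is left almost unchanged. Below is a minimal pure-Python sketch of that formula on toy 2-dimensional vectors; the function name `soft_mask` and all values are hypothetical.

```python
def soft_mask(embeddings, probs, mask_embedding):
    """Blend each input embedding with the [MASK] embedding.

    e'_i = p_i * e_mask + (1 - p_i) * e_i
    """
    if len(embeddings) != len(probs):
        raise ValueError("expected one error probability per position")
    return [
        [p_i * m + (1.0 - p_i) * e for m, e in zip(mask_embedding, e_i)]
        for e_i, p_i in zip(embeddings, probs)
    ]


# Toy values: position 0 looks correct, position 2 looks like an error.
embeddings = [[1.0, 0.0], [0.0, 1.0], [2.0, 2.0]]
probs = [0.0, 0.5, 1.0]
mask_embedding = [4.0, 4.0]  # stands in for BERT's learned [MASK] embedding
soft_masked = soft_mask(embeddings, probs, mask_embedding)
print(soft_masked)  # [[1.0, 0.0], [2.0, 2.5], [4.0, 4.0]]
```

Because the blend is continuous in p_i, the whole pipeline stays differentiable, so the detection network and BERT can be trained together rather than making a hard mask / no-mask decision.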

Limitations

This soft-masking approach has limitations. For example, in aspect-level sentiment analysis we could also use [MASK] to mark aspect words, but a single [MASK] symbol is insufficient.

Consider the sentences:

This price is low.

This quality is low.

Here, price and quality are aspect terms. If we mask them with [MASK], we get:

This [MASK] is low.

This [MASK] is low.

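The two masked sentences are now identical, even though a low price is positive and low quality is negative, so the model cannot tell them apart. This leads to poor performance, which suggests we should use more kinds of mask symbols.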
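To make the limitation concrete, here is a small sketch that masks the aspect terms in the two example sentences, once with a single [MASK] symbol and once with one symbol per aspect category. The helper `mask_aspects` and the symbols [MASK_PRICE] and [MASK_QUALITY] are hypothetical: they are not part of BERT's vocabulary and would have to be added as new tokens and learned during fine-tuning.

```python
def mask_aspects(tokens, aspect_symbols):
    """Replace each aspect term with its mask symbol; keep other tokens unchanged."""
    return [aspect_symbols.get(token, token) for token in tokens]


sentences = [
    ["This", "price", "is", "low", "."],
    ["This", "quality", "is", "low", "."],
]

# One shared symbol for every aspect term (the approach criticised above).
single = {"price": "[MASK]", "quality": "[MASK]"}
# Hypothetical category-level symbols: a synonym such as "cost" shares a symbol with "price".
typed = {"price": "[MASK_PRICE]", "cost": "[MASK_PRICE]", "quality": "[MASK_QUALITY]"}

single_masked = [mask_aspects(s, single) for s in sentences]
typed_masked = [mask_aspects(s, typed) for s in sentences]

print(single_masked[0] == single_masked[1])  # True: the inputs are indistinguishable
print(typed_masked[0] == typed_masked[1])    # False: the aspect category is preserved
```

Assigning symbols per aspect category rather than per word keeps part of the masking effect, since different words for the same aspect still collapse to one symbol.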