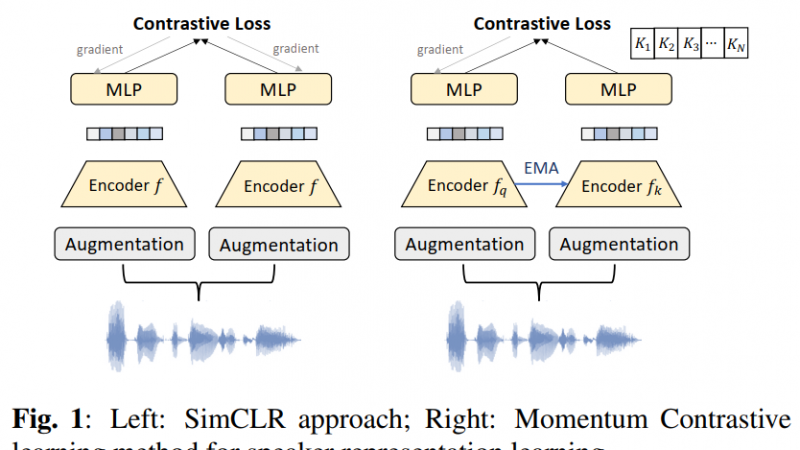

SimCLR mines negative examples from the current mini-batch, so the number of negatives is constrained by the mini-batch size N.
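To make the dependence on N concrete, here is a minimal pure-Python sketch of an NT-Xent-style in-batch contrastive loss. The function name `nt_xent_loss`, the list-of-lists input format, and the default temperature of 0.5 are assumptions made for illustration, not details taken from the text. With N samples and two views each, every anchor is compared against 2(N - 1) in-batch negatives.

```python
import math


def _normalize(vec):
    """Scale a vector to unit length so dot products are cosine similarities."""
    norm = math.sqrt(sum(x * x for x in vec))
    return [x / norm for x in vec]


def nt_xent_loss(view_a, view_b, temperature=0.5):
    """In-batch contrastive loss over N positive pairs (view_a[i], view_b[i]).

    Hypothetical helper: every other view in the batch acts as a negative,
    so each anchor sees 2 * (N - 1) negatives.
    """
    n = len(view_a)
    z = [_normalize(v) for v in list(view_a) + list(view_b)]  # 2N unit vectors
    total = 0.0
    for i in range(2 * n):
        pos = (i + n) % (2 * n)  # index of the other view of the same sample
        # Similarities to every view except the anchor itself.
        logits = [
            sum(a * b for a, b in zip(z[i], z[k])) / temperature
            for k in range(2 * n)
            if k != i
        ]
        pos_logit = sum(a * b for a, b in zip(z[i], z[pos])) / temperature
        # Numerically stable log-sum-exp for the softmax denominator.
        m = max(logits)
        log_denominator = m + math.log(sum(math.exp(l - m) for l in logits))
        total += log_denominator - pos_logit
    return total / (2 * n)


def negatives_per_anchor(n):
    """Number of in-batch negatives available to each anchor."""
    return 2 * (n - 1)
```

Doubling the batch size roughly doubles the number of negatives per anchor, which is why methods of this kind tend to favor large batches.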

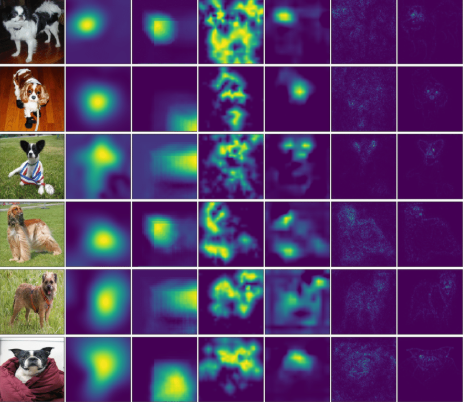

Contrastive learning in the latent space has recently shown great promise, which aims to make the representation

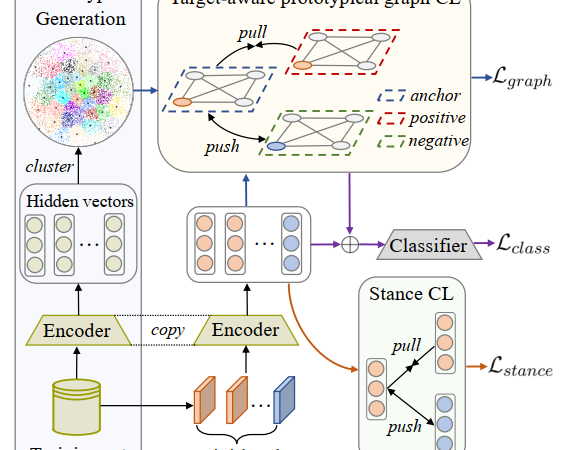

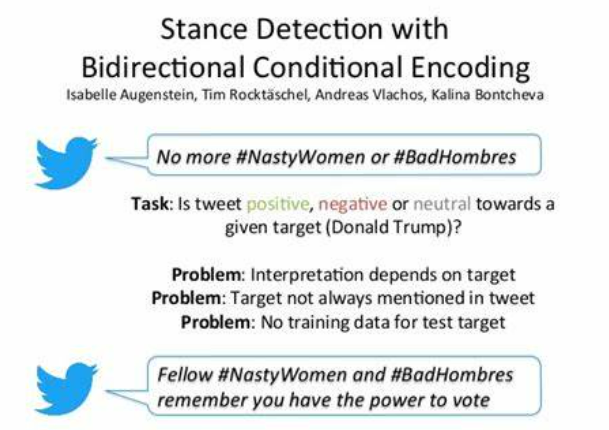

Zero-shot stance detection (ZSSD) aims to detect the stance toward unseen destination targets by learning stance features from known targets

Chen et al. (2018) proposed ADAN, an architecture based on a feed-forward neural network with three main components

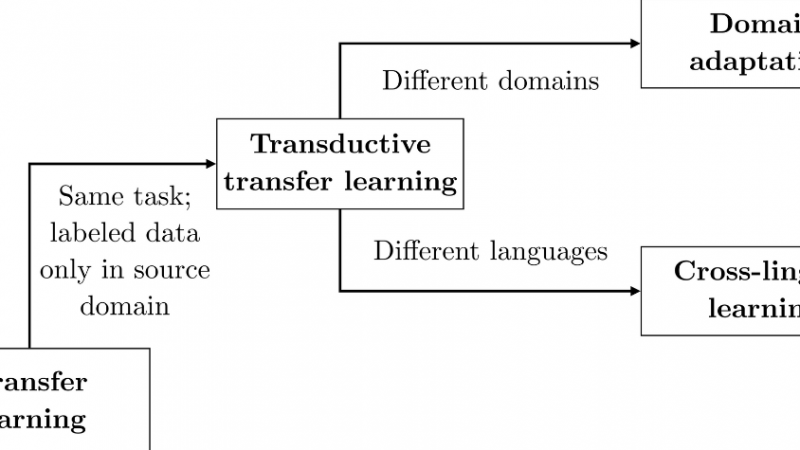

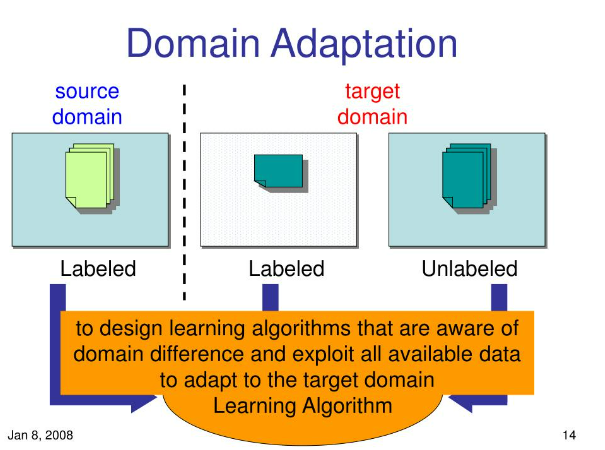

Several works employed domain adaptation to improve performance.

Devlin et al. (2019) produced two BERT models, one for English and one for Chinese. To support other languages, they trained a multilingual BERT (mBERT)

An integral part of developing PLMs is providing multitask NLU benchmarks that demonstrate the linguistic abilities of new models and approaches

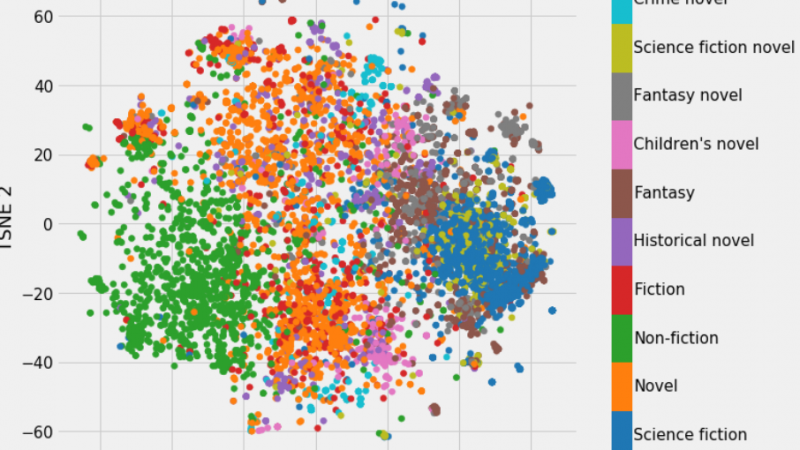

Recent studies on the geometric properties of contextual embedding space have observed that the distribution of embedding vectors is far from isotropic and occupies a relatively narrow cone space
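One simple way to probe this property is the mean cosine similarity between pairs of embedding vectors: values near 1 suggest the vectors crowd into a narrow cone, while values near or below 0 suggest directions are spread out. The sketch below is an illustrative diagnostic under that assumption, not necessarily the measure used in the cited studies, and the helper names are hypothetical.

```python
import math
from itertools import combinations


def cosine(u, v):
    """Cosine similarity between two non-zero vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


def mean_pairwise_cosine(vectors):
    """Average cosine similarity over all distinct pairs of vectors."""
    pairs = list(combinations(vectors, 2))
    return sum(cosine(u, v) for u, v in pairs) / len(pairs)


# Toy data (made up for illustration): one set inside a narrow cone,
# one set spread evenly around the origin.
narrow_cone = [[1.0, 0.1], [1.0, -0.1], [1.0, 0.05], [1.0, 0.0]]
spread_out = [[1.0, 0.0], [-1.0, 0.0], [0.0, 1.0], [0.0, -1.0]]
```

On the toy data, `mean_pairwise_cosine(narrow_cone)` is close to 1, whereas `mean_pairwise_cosine(spread_out)` is negative.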

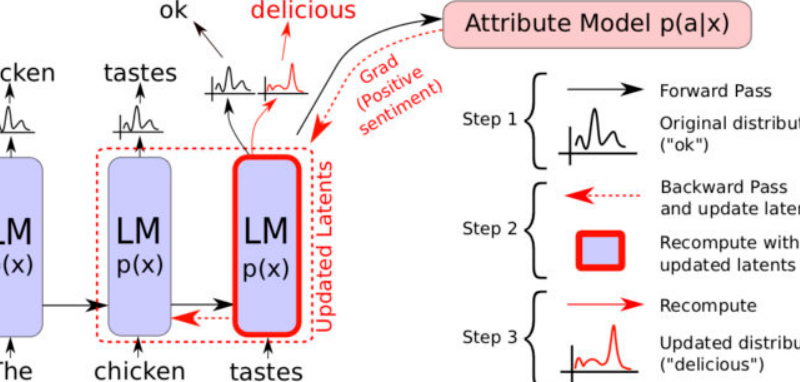

Neural generative language models treat text generation as conditional language modeling
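In the standard autoregressive formulation, conditional language modeling factorizes the probability of an output sequence token by token. The symbols here are notational assumptions: $x$ is the conditioning input, $y = (y_1, \dots, y_T)$ the generated sequence, and $\theta$ the model parameters.

$$
p_\theta(y \mid x) = \prod_{t=1}^{T} p_\theta(y_t \mid y_{<t}, x)
$$

Training typically minimizes the negative log-likelihood $-\sum_{t=1}^{T} \log p_\theta(y_t \mid y_{<t}, x)$ over the training pairs.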

Gradient-based methods provide alternatives to attention weights to compute the importance of a specific input feature.
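As a minimal sketch of the idea, the example below computes two common gradient-based scores, the absolute gradient and gradient × input, for a tiny logistic model whose gradient can be written in closed form. The model, the function names, and the weights are hypothetical choices for illustration. A real network would obtain the gradient through automatic differentiation instead.

```python
import math


def sigmoid(s):
    return 1.0 / (1.0 + math.exp(-s))


def predict(weights, bias, x):
    """Logistic model: p = sigmoid(w . x + b)."""
    return sigmoid(sum(w * xi for w, xi in zip(weights, x)) + bias)


def gradient_saliency(weights, bias, x):
    """Return (|dp/dx_i|, dp/dx_i * x_i) for each input feature.

    For the logistic model above, dp/dx_i = p * (1 - p) * w_i.
    """
    p = predict(weights, bias, x)
    grad = [p * (1.0 - p) * w for w in weights]
    abs_gradient = [abs(g) for g in grad]
    grad_times_input = [g * xi for g, xi in zip(grad, x)]
    return abs_gradient, grad_times_input


# Illustrative values only: the third feature has zero weight,
# so both scores assign it zero importance.
abs_grad, grad_x_input = gradient_saliency([2.0, -1.0, 0.0], 0.0, [1.0, 2.0, 3.0])
```

The two scores can rank features differently: the absolute gradient measures local sensitivity, while gradient × input also accounts for the magnitude of the feature value.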