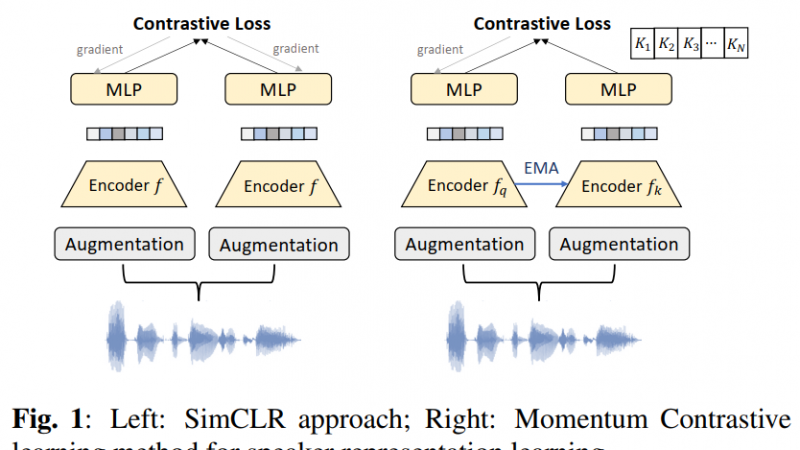

SimCLR mines negative examples from the samples in the current mini-batch, so the number of negatives is constrained by the mini-batch size N.
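To make the batch-size constraint concrete, here is a minimal sketch that lists the in-batch negatives for one augmented view. It assumes each of the N samples is augmented twice, giving 2N views, and that views `k` and `k + N` come from the same sample. The function name `negative_indices` and this index layout are illustrative assumptions, not part of any library.

```python
def negative_indices(i: int, n: int) -> list[int]:
    """Return the indices of the in-batch negatives for view i.

    Assumed layout (hypothetical): the batch holds 2n views, and views
    k and k + n are the two augmentations of the same original sample.
    """
    total = 2 * n
    positive = (i + n) % total  # the other augmentation of the same sample
    # Every view except i itself and its positive partner is a negative.
    return [j for j in range(total) if j != i and j != positive]


n = 4
negatives = negative_indices(0, n)
print(negatives)       # [1, 2, 3, 5, 6, 7]
print(len(negatives))  # 6, i.e. 2 * (n - 1)
```

Under this layout each view has 2(N - 1) negatives, so the only way to get more negatives is to increase N.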

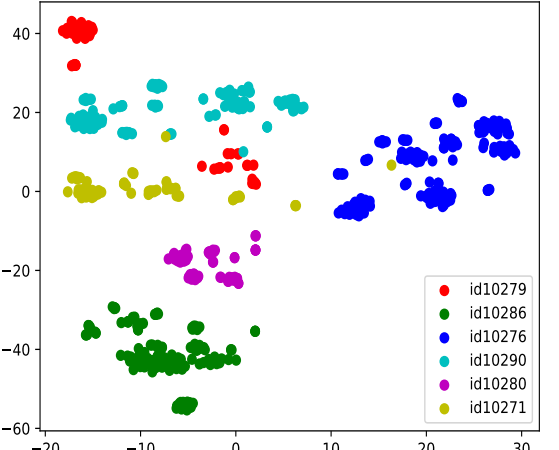

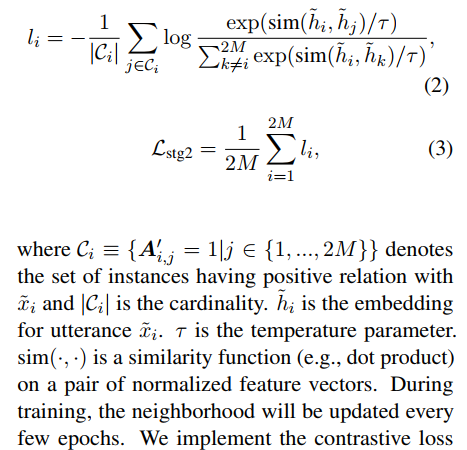

Contrastive learning in the latent space has recently shown great promise, which aims to make the representation

SimCLR directly maximizes the similarity between the two augmented views of a positive pair and minimizes the similarity between negative pairs.
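The objective can be sketched as an NT-Xent style loss (normalized temperature-scaled cross entropy) in plain Python. This is an illustrative sketch under stated assumptions, not a reference implementation: embeddings are plain lists of floats, none is the zero vector, views `k` and `k + N` form a positive pair, and the function names and the default temperature of 0.5 are chosen for the example.

```python
import math


def cosine(u, v):
    """Cosine similarity of two non-zero vectors given as lists of floats."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


def nt_xent(z, temperature=0.5):
    """Mean NT-Xent loss over 2n embeddings.

    Assumed layout (hypothetical): z[k] and z[k + n] are the two
    augmented views of sample k; all other views act as negatives.
    """
    total = len(z)
    n = total // 2
    loss = 0.0
    for i in range(total):
        pos = (i + n) % total
        # Scaled similarities to every other view (the positive and all negatives).
        logits = [cosine(z[i], z[j]) / temperature for j in range(total) if j != i]
        pos_logit = cosine(z[i], z[pos]) / temperature
        # Numerically stable log-sum-exp for the denominator.
        m = max(logits)
        log_denom = m + math.log(sum(math.exp(l - m) for l in logits))
        # -log( exp(pos) / sum(exp(all others)) )
        loss += log_denom - pos_logit
    return loss / total


# Positive pairs point in similar directions: low loss.
aligned = [[1.0, 0.0], [0.0, 1.0], [1.0, 0.1], [0.1, 1.0]]
# Positive pairs point in different directions: higher loss.
misaligned = [[1.0, 0.0], [0.0, 1.0], [0.1, 1.0], [1.0, 0.1]]
print(nt_xent(aligned) < nt_xent(misaligned))  # True
```

Minimizing this loss raises the similarity of each positive pair relative to the similarities with the in-batch negatives, which is the push and pull described above.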

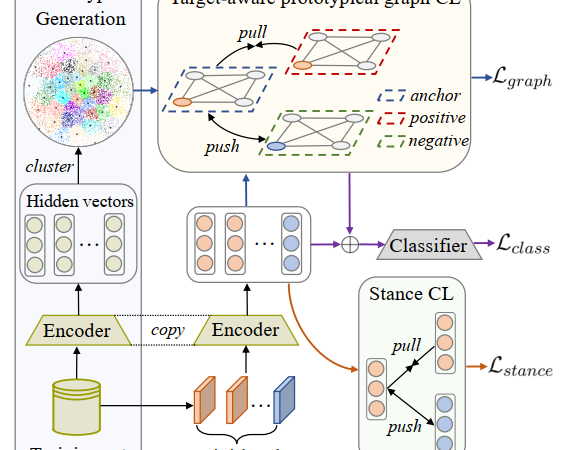

Contrastive learning is most often used as a self-supervised method, and it is a good way to improve text clustering. This tutorial introduces what it is.