DC-Bi-LSTM is a TensorFlow implementation of the paper: Densely Connected Bidirectional LSTM with Applications to Sentence Classification. This model allows us to build deep Bi-LSTM networks while avoiding the vanishing-gradient and overfitting problems.

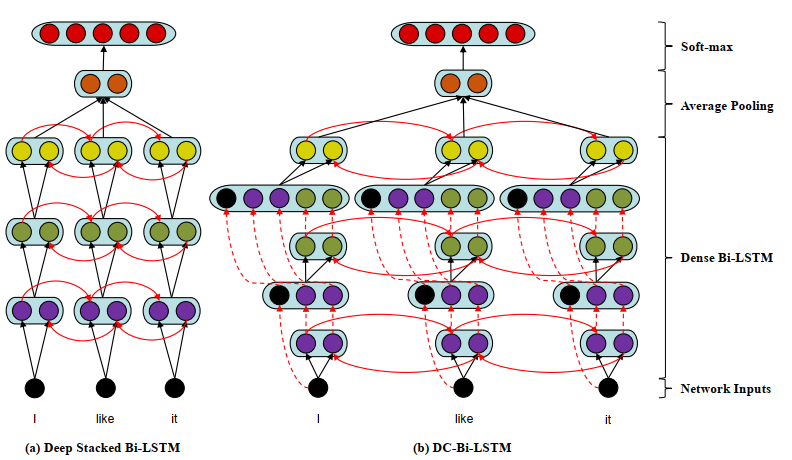

The structure of DC-Bi-LSTM

The DC-Bi-LSTM looks like:
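The dense connectivity can be sketched in a few lines. In DC-Bi-LSTM, the first Bi-LSTM layer reads the word embeddings, and every later layer reads the concatenation of the embeddings and the outputs of all earlier layers. The last layer's outputs are then average-pooled and passed to a softmax classifier. The numpy sketch below is a minimal, untrained forward pass under those assumptions, using random weights and hypothetical function names (`lstm_forward`, `bi_lstm`, `dc_bi_lstm`). It is not the repository's TensorFlow code.

```python
import numpy as np

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def lstm_forward(x, W, U, b):
    """Run a one-direction LSTM over x of shape (T, d_in); return (T, h)."""
    h_dim = U.shape[0]
    h = np.zeros(h_dim)
    c = np.zeros(h_dim)
    outputs = []
    for t in range(x.shape[0]):
        z = x[t] @ W + h @ U + b               # (4h,) pre-activations
        i, f, o, g = np.split(z, 4)            # input, forget, output gates, candidate
        i, f, o, g = sigmoid(i), sigmoid(f), sigmoid(o), np.tanh(g)
        c = f * c + i * g
        h = o * np.tanh(c)
        outputs.append(h)
    return np.stack(outputs)

def init_lstm(rng, d_in, h_dim):
    # Hypothetical random initialisation, only for this sketch
    W = rng.normal(scale=0.1, size=(d_in, 4 * h_dim))
    U = rng.normal(scale=0.1, size=(h_dim, 4 * h_dim))
    b = np.zeros(4 * h_dim)
    return W, U, b

def bi_lstm(x, fw, bw):
    out_fw = lstm_forward(x, *fw)
    out_bw = lstm_forward(x[::-1], *bw)[::-1]  # reverse time, run, reverse back
    return np.concatenate([out_fw, out_bw], axis=-1)  # (T, 2h)

def dc_bi_lstm(x, num_layers, h_dim, seed=0):
    rng = np.random.default_rng(seed)
    features = x                               # layer 1 sees only the embeddings
    for _ in range(num_layers):
        d_in = features.shape[-1]              # grows by 2h after every layer
        fw = init_lstm(rng, d_in, h_dim)
        bw = init_lstm(rng, d_in, h_dim)
        out = bi_lstm(features, fw, bw)
        # Dense connection: next input = embeddings + all earlier layer outputs
        features = np.concatenate([features, out], axis=-1)
    last = features[:, -2 * h_dim:]            # outputs of the final Bi-LSTM layer
    return features, last.mean(axis=0)         # average pooling over time steps
```

Because each layer appends 2h features, layer l receives d + 2h(l - 1) inputs for embedding size d and hidden size h per direction. With illustrative values d = 300 and h = 100 (not the paper's settings), layer 15 would take 300 + 2 · 100 · 14 = 3100 inputs, so small per-layer hidden sizes keep a deep stack manageable.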

Datasets used in DC-Bi-LSTM

DC-Bi-LSTM is trained and evaluated on several datasets: MR (Movie Review Data), SST-1 (Stanford Sentiment Treebank), SST-2, Subj and TREC.

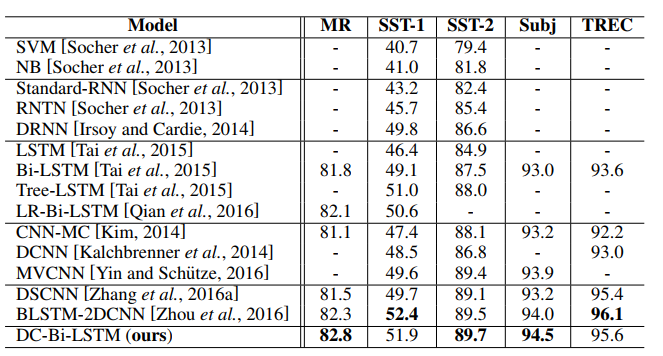

Comparable Results of DC-Bi-LSTM

The paper reports several results for DC-Bi-LSTM; here we show one comparison.

From the results, we can see that DC-Bi-LSTM outperforms LSTM, Bi-LSTM, Tree-LSTM and other methods. Note, however, that these results were obtained with a 15-layer DC-Bi-LSTM.

How to use DC-Bi-LSTM?

Step 1: Download GloVe vectors

    $ cd dataset
    $ ./download_emb.sh
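GloVe vectors are distributed as plain text files in which each line holds a token followed by its vector components, separated by spaces. A minimal loader, assuming that format, might look like the sketch below. `parse_glove_lines` and `load_glove` are hypothetical helpers. The exact GloVe file fetched by `download_emb.sh` is not specified here, so the vector dimension `dim` is a parameter.

```python
import numpy as np

def parse_glove_lines(lines, dim, vocab=None):
    """Parse GloVe text lines ("token v1 ... v_dim") into a {token: vector} dict."""
    vectors = {}
    for line in lines:
        parts = line.rstrip("\r\n").split(" ")
        if len(parts) < dim + 1:
            continue                           # skip blank or malformed lines
        # The last `dim` fields are the vector; anything before is the token
        # (a few tokens in the large Common Crawl files contain spaces).
        word = " ".join(parts[:-dim])
        if vocab is not None and word not in vocab:
            continue                           # keep only words we need
        vectors[word] = np.asarray(parts[-dim:], dtype=np.float32)
    return vectors

def load_glove(path, dim, vocab=None):
    with open(path, encoding="utf-8") as f:
        return parse_glove_lines(f, dim, vocab)
```

Passing a `vocab` set keeps memory use low, because full GloVe files contain far more words than a sentence-classification dataset needs.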

Step 2: Build datasets

    $ cd dataset
    $ python3 prepro.py
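Building the datasets typically means tokenizing sentences, mapping tokens to integer ids, padding to a fixed length and collecting the matching GloVe rows into an embedding matrix. The sketch below illustrates those steps under simple assumptions: lower-cased whitespace tokenization, index 0 for padding, index 1 for unknown words, and small random vectors for words missing from GloVe. The helper names are hypothetical; this is an illustration, not what `prepro.py` actually does.

```python
import numpy as np
from collections import Counter

PAD, UNK = "<pad>", "<unk>"

def build_vocab(sentences, min_count=1):
    """Map each token seen at least min_count times to an id; 0=PAD, 1=UNK."""
    counts = Counter(tok for s in sentences for tok in s.lower().split())
    words = sorted(w for w, c in counts.items() if c >= min_count)
    return {w: i for i, w in enumerate([PAD, UNK] + words)}

def to_ids(sentence, vocab, max_len):
    """Convert a sentence to ids, truncated or padded to exactly max_len."""
    ids = [vocab.get(tok, vocab[UNK]) for tok in sentence.lower().split()][:max_len]
    return ids + [vocab[PAD]] * (max_len - len(ids))

def build_embedding_matrix(vocab, glove, dim, seed=0):
    """Row i holds the GloVe vector of word i, or a small random vector if absent."""
    rng = np.random.default_rng(seed)
    emb = rng.uniform(-0.05, 0.05, size=(len(vocab), dim)).astype(np.float32)
    emb[vocab[PAD]] = 0.0                      # padding row stays all zeros
    for word, idx in vocab.items():
        if word in glove:
            emb[idx] = glove[word]
    return emb
```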

Step 3: Train model

    $ python3 train_model.py --task <str> --resume_training <bool> --has_devset <bool>
    # e.g.:
    $ python3 train_model.py --task subj --resume_training True --has_devset False
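The `<bool>` placeholders take the literal strings True or False. The sketch below is a hypothetical parser for the same three flags, not the repository's code, and its defaults are assumptions. It also shows a common pitfall: with `argparse`, `type=bool` treats any non-empty string, including "False", as True, so boolean flags given as text need explicit parsing.

```python
import argparse

def str2bool(value):
    # type=bool would map the string "False" to True (non-empty strings are
    # truthy), so compare the text explicitly instead.
    if value.lower() in ("true", "1", "yes"):
        return True
    if value.lower() in ("false", "0", "no"):
        return False
    raise argparse.ArgumentTypeError(f"expected a boolean, got {value!r}")

def parse_args(argv=None):
    # Hypothetical parser mirroring the documented command-line flags
    parser = argparse.ArgumentParser(description="Train DC-Bi-LSTM (sketch)")
    parser.add_argument("--task", type=str, required=True,
                        help="dataset name, e.g. subj")
    parser.add_argument("--resume_training", type=str2bool, default=False)
    parser.add_argument("--has_devset", type=str2bool, default=False)
    return parser.parse_args(argv)
```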

Source code is here: DC-Bi-LSTM Source Code Download

Moreover, we can also use an attention mechanism to improve DC-Bi-LSTM. Here is a tutorial:

An Improvement to Densely Connected Bidirectional LSTM – LSTM Tutorial
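One common way to add attention is to replace the average pooling over the last layer's outputs with a learned weighted average. The numpy sketch below shows generic additive attention pooling; the function name `attention_pool` and the parameters `w` and `v` are hypothetical. It is an illustration of the general technique and not necessarily what the tutorial above does.

```python
import numpy as np

def attention_pool(H, w, v):
    """Additive attention over time steps.

    H: (T, d) Bi-LSTM outputs, w: (d, a) projection, v: (a,) scoring vector.
    Returns the weighted sum (d,) and the attention weights (T,).
    """
    scores = np.tanh(H @ w) @ v                # (T,) unnormalised scores
    scores = scores - scores.max()             # subtract max for numerical stability
    alpha = np.exp(scores) / np.exp(scores).sum()  # softmax over time steps
    return alpha @ H, alpha
```

When every score is equal (for example `v` all zeros), the weights are uniform and the result reduces to plain average pooling, so attention pooling generalizes it.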