Layer Normalization can speed up model training and may help reduce over-fitting. In this tutorial, we will introduce how to apply it to the outputs of BiLSTM, LSTM and GRU models.

Layer Normalization

Layer Normalization is widely used in recurrent models such as LSTM, GRU and BiLSTM. If you are new to it, you can read this tutorial first:

An Explain to Layer Normalization in Neural Networks – Machine Learning Tutorial

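In TensorFlow 1.x, we can use the tf.contrib.layers.layer_norm() function to normalize a layer. tf.contrib was removed in TensorFlow 2.x, where tf.keras.layers.LayerNormalization provides this operation instead. Here is a tutorial: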

Layer Normalization Explained for Beginners – Deep Learning Tutorial

We will use the output of a BiLSTM as an example.

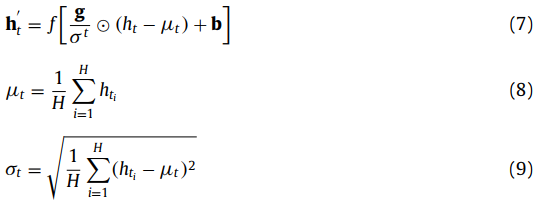

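Suppose the output of a BiLSTM at time step \(t\) is \(h_t \in \mathbb{R}^H\), with elements \(h_t^i\). Its layer normalization can be computed as follows:

\[
\mathrm{LN}(h_t) = g \odot \frac{h_t - \mu_t}{\sigma_t} + b,
\qquad
\mu_t = \frac{1}{H}\sum_{i=1}^{H} h_t^i,
\qquad
\sigma_t = \sqrt{\frac{1}{H}\sum_{i=1}^{H}\left(h_t^i - \mu_t\right)^2}
\]

Here \(\mu_t\) and \(\sigma_t\) are the mean and standard deviation of the \(H\) elements of \(h_t\) (so \(\sigma_t^2\) is their variance), \(g\) and \(b\) are learnable scale and offset vectors of size \(H\), and \(\odot\) is element-wise multiplication. In practice, a small constant \(\epsilon\) is usually added to the variance, giving \(\sqrt{\sigma_t^2 + \epsilon}\) in the denominator, to avoid dividing by zero.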
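To make the formula concrete, here is a minimal pure-Python sketch that normalizes one BiLSTM output vector. It assumes that \(h_t\) is the concatenation of the forward and backward hidden states (the usual way BiLSTM outputs are merged), that \(g\) and \(b\) start at ones and zeros, and that a small \(\epsilon = 10^{-5}\) is added to the variance. The names `layer_norm`, `forward_h` and `backward_h` and all the numbers are hypothetical, chosen only for illustration.

```python
import math
from typing import List

Vector = List[float]


def layer_norm(h: Vector, g: Vector, b: Vector, eps: float = 1e-5) -> Vector:
    """Layer-normalize one hidden state h_t over its H features.

    mu_t and sigma_t are computed from the elements of h_t alone. eps is an
    assumed small constant that keeps the division stable when the variance
    is close to zero.
    """
    H = len(h)
    mu = sum(h) / H                            # mean mu_t of h_t
    var = sum((x - mu) ** 2 for x in h) / H    # variance sigma_t^2 of h_t
    sigma = math.sqrt(var + eps)               # standard deviation (with eps)
    # Normalize, then scale element-wise by g and shift by b.
    return [gi * (x - mu) / sigma + bi for x, gi, bi in zip(h, g, b)]


# Hypothetical BiLSTM output at time step t: forward and backward hidden
# states (2 units each) concatenated into h_t with H = 4 features.
forward_h = [1.0, 2.0]
backward_h = [3.0, 4.0]
h_t = forward_h + backward_h

# Assumed initialization: g = 1 and b = 0, so LN(h_t) = (h_t - mu_t) / sigma_t.
g = [1.0] * len(h_t)
b = [0.0] * len(h_t)

y = layer_norm(h_t, g, b)
print([round(x, 3) for x in y])  # [-1.342, -0.447, 0.447, 1.342]
```

With \(g = 1\) and \(b = 0\) the result has zero mean and (almost exactly) unit variance; during training, \(g\) and \(b\) are learned so the model can rescale and shift the normalized values if that helps.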

For LSTM and GRU, we can compute layer normalization in the same way, because they are also sequence models that output a hidden state \(h_t\) at each time step.
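For a whole LSTM or GRU output sequence, the same computation is simply repeated at every time step. The pure-Python sketch below assumes the outputs are stored as a list of \(T\) vectors of size \(H\) and that one pair of \(g\), \(b\) is shared by all time steps (an assumption of this sketch). `layer_norm_sequence` and the example values are hypothetical. The point it shows is that \(\mu_t\) and \(\sigma_t\) come only from \(h_t\) itself, so steps with very different magnitudes end up on a comparable scale.

```python
import math
from typing import List

Vector = List[float]


def layer_norm(h: Vector, g: Vector, b: Vector, eps: float = 1e-5) -> Vector:
    """Normalize a single hidden state over its H features."""
    mu = sum(h) / len(h)
    var = sum((x - mu) ** 2 for x in h) / len(h)
    sigma = math.sqrt(var + eps)
    return [gi * (x - mu) / sigma + bi for x, gi, bi in zip(h, g, b)]


def layer_norm_sequence(outputs: List[Vector], g: Vector, b: Vector) -> List[Vector]:
    """Normalize every time step independently, reusing the same g and b.

    No statistics are shared across time steps or across examples in a
    batch: each h_t is normalized with its own mu_t and sigma_t.
    """
    return [layer_norm(h_t, g, b) for h_t in outputs]


# Hypothetical LSTM/GRU outputs: T = 3 time steps, H = 3 hidden units.
# The magnitudes differ a lot between steps on purpose.
outputs = [
    [0.1, 0.5, 0.9],
    [2.0, -1.0, 0.5],
    [10.0, 10.0, 13.0],
]
g = [1.0, 1.0, 1.0]
b = [0.0, 0.0, 0.0]

for t, y_t in enumerate(layer_norm_sequence(outputs, g, b)):
    # Each printed vector has mean ~0 and variance ~1, whatever the raw scale.
    print(t, [round(v, 3) for v in y_t])
```

Because the statistics do not depend on the batch, this works the same way for a batch size of 1 and for sequences of any length.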