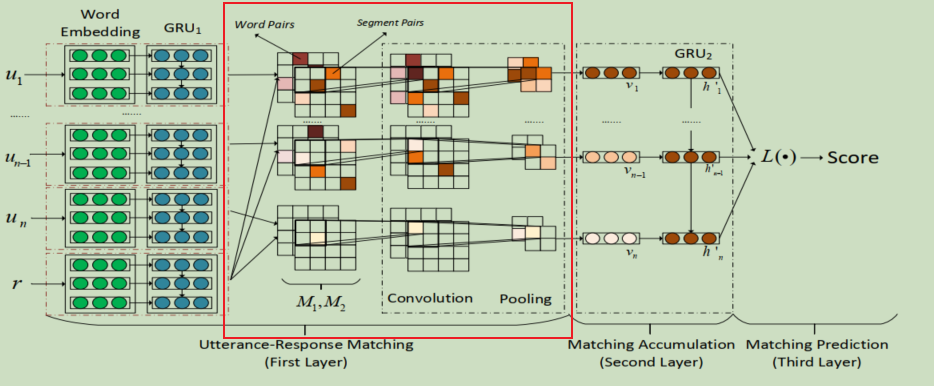

In this article, we will introduce a method that uses a word or sentence similarity matrix for classification.

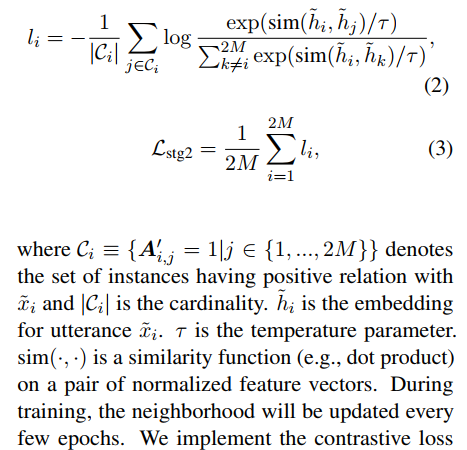

Contrastive learning is a supervised method that can improve text clustering. In this tutorial, we will explain what it is.

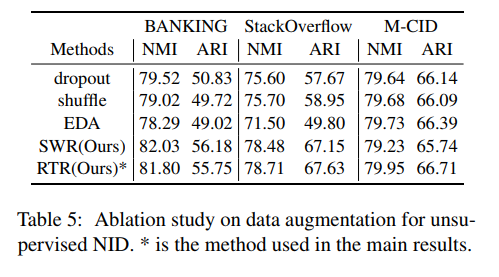

Data augmentation can boost the performance of an intent classifier. In this post, we will introduce one data augmentation method.

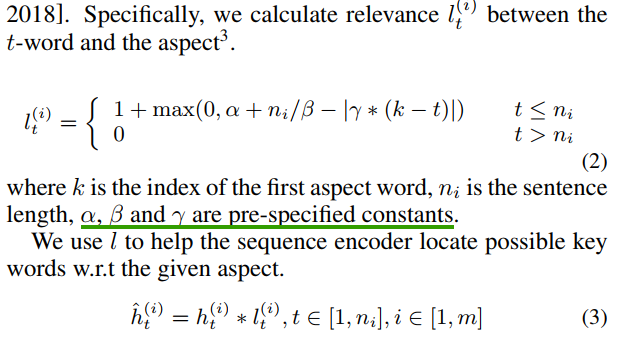

In this article, we will introduce a way to compute the relevance between words and aspect terms from their distance in a sentence. We also point out two main problems with this method.

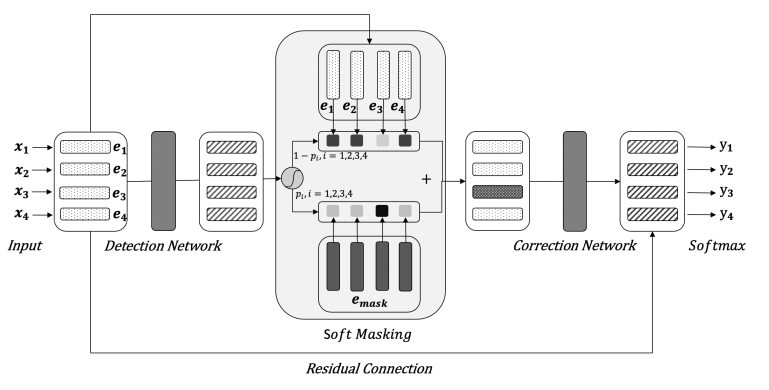

In NLP, we usually do not mask any input embeddings for BERT in text classification tasks. However, the paper Spelling Error Correction with Soft-Masked BERT proposes a masking method.

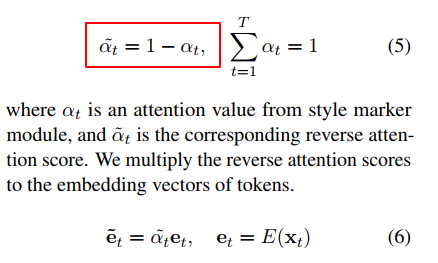

In this article, we will introduce what reverse attention is and how to use it in the text style transfer task.

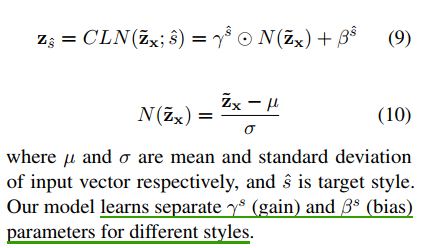

Conditional Layer Normalization allows us to normalize a representation based on different targets or features.

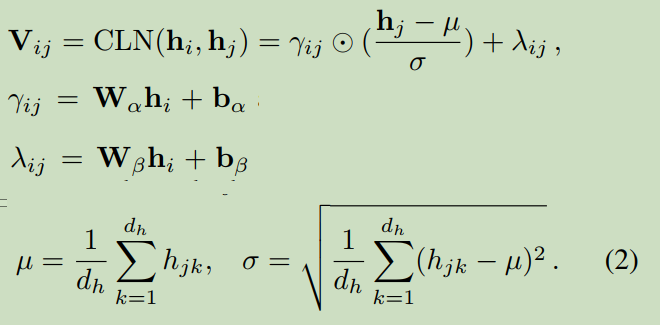

Conditional Layer Normalization (CLN) is used in the paper Unified Named Entity Recognition as Word-Word Relation Classification. In this tutorial, we will introduce it.

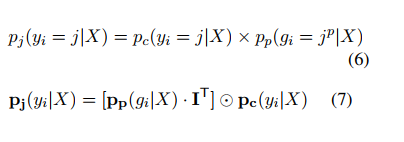

In this article, we will introduce how to make an inference based on a joint distribution. You can use this method in some multi-task problems.

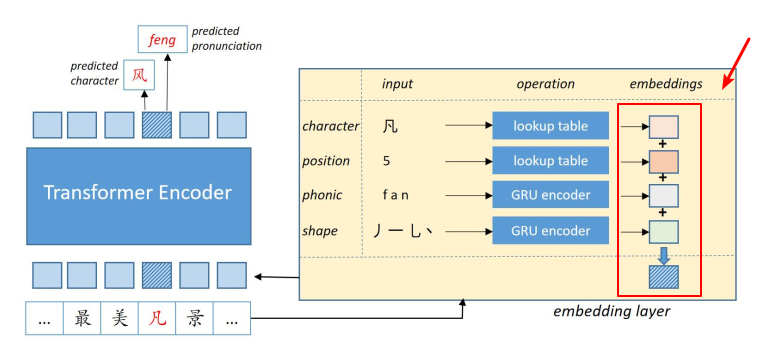

In this article, we will introduce a method to incorporate extra features into BERT inputs to improve the performance of NLP tasks.